Seeing a 500 Internal Server Error in Ollama can feel like hitting a wall, especially when you’re in the middle of testing a model or deploying a local AI-powered application. Unlike clear, descriptive errors, a 500 status code is frustratingly vague: it simply means that something went wrong on the server side. The good news? In most cases, the cause is a backend misconfiguration or a port-related issue that you can fix yourself.

TLDR: An Ollama 500 Internal Server Error is usually caused by backend crashes, port conflicts, firewall blocks, reverse proxy misconfiguration, or environment variable mistakes. Start by checking if Ollama is running and listening on the correct port, then verify that no other service is using the same port. Review logs, proxy settings, and firewall rules to isolate the issue. Most 500 errors can be resolved with proper backend and port configuration.

In this guide, we’ll explore five practical backend and port configuration solutions that will help you diagnose and fix the issue quickly and confidently.

Understanding the Ollama 500 Internal Server Error

A 500 Internal Server Error means the server encountered an unexpected condition that prevented it from fulfilling the request. In Ollama’s case, this could happen when:

- The Ollama service crashes or fails to start

- The configured port is blocked or already in use

- A reverse proxy like Nginx is misconfigured

- System permissions prevent binding to a port

- Firewall rules block incoming requests

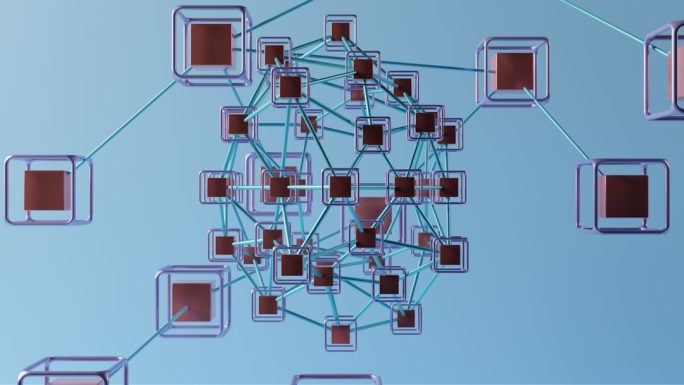

Before diving into fixes, it’s helpful to visualize how Ollama communicates within your system.

Ollama typically runs as a local backend service listening on a defined port (commonly 11434). Requests from your application, browser, or API client travel through that port. If anything interrupts this flow, a 500 error can occur.

Solution 1: Ensure Ollama Is Running Properly

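The simplest and most common cause is that the Ollama backend process isn’t running or has crashed. A client that talks to Ollama directly then sees a refused connection, while an application or proxy sitting in front of it usually reports the failure as a 500 (or 502) error.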

Step 1: Check Service Status

Use the following command in your terminal:

- macOS/Linux:

ps aux | grep '[o]llama'

- Windows (PowerShell):

Get-Process ollama

If no process appears, the server isn’t running. (The brackets in the grep pattern keep grep from listing its own process as a match.)

Step 2: Restart Ollama

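Start the server again. On Linux installs managed by systemd, run sudo systemctl restart ollama instead; otherwise run: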

ollama serve

If Ollama starts successfully, test it by visiting:

http://localhost:11434

If the server responds (a healthy instance answers with the plain text "Ollama is running"), the issue may have been a temporary crash. If it fails immediately, inspect the logs.
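The browser test above can also be scripted. The following is a minimal sketch, not an official Ollama tool: the function name `probe` and its return strings are made up for illustration, and it only distinguishes three cases (the server answered normally, the server answered with an error status, or nothing answered at all).

```python
import urllib.error
import urllib.request

# Bypass any system-wide HTTP proxy so the request goes straight to the server.
_opener = urllib.request.build_opener(urllib.request.ProxyHandler({}))


def probe(url: str, timeout: float = 5.0) -> str:
    """Classify what happens when we send a GET request to the URL."""
    try:
        with _opener.open(url, timeout=timeout) as resp:
            return f"ok ({resp.status})"
    except urllib.error.HTTPError as err:
        # The server answered, but with an error status such as 500.
        return f"http error ({err.code})"
    except OSError as err:
        # No HTTP answer at all: refused connection, timeout, DNS failure.
        return f"unreachable ({err})"
```

Calling `probe("http://localhost:11434")` against the default address separates "Ollama is down" (unreachable) from "Ollama is up but failing" (http error), which decides whether to look at the process or at the logs next.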

Step 3: Check Logs for Crash Information

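Logs often reveal memory allocation errors, corrupted model files, or port binding failures, so reviewing them can save hours of guesswork. On macOS the server log is usually at ~/.ollama/logs/server.log; on Linux installs that run under systemd, use journalctl -u ollama; in Docker, use docker logs with the container name. If you started ollama serve by hand, the output appears in that terminal.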
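When a log is long, a small filter helps surface the relevant lines. This is a rough sketch: the keyword list is an assumption chosen to match the failure types mentioned above, not an official list of Ollama log messages, so adjust it to what your own logs contain.

```python
from typing import Iterable, List

# Assumed keywords; adjust them to what your own logs actually contain.
SUSPECT_KEYWORDS = ("error", "panic", "out of memory", "address already in use")


def suspicious_lines(lines: Iterable[str], keywords=SUSPECT_KEYWORDS) -> List[str]:
    """Return the lines that mention any keyword, case-insensitively."""
    hits = []
    for line in lines:
        lowered = line.lower()
        if any(keyword in lowered for keyword in keywords):
            hits.append(line.rstrip("\n"))
    return hits


def scan_file(path: str, last_n: int = 200) -> List[str]:
    """Scan only the last `last_n` lines of a log file."""
    with open(path, encoding="utf-8", errors="replace") as handle:
        tail = handle.readlines()[-last_n:]
    return suspicious_lines(tail)
```

`scan_file` takes whatever log path applies to your install; the function itself makes no assumption about where the file lives.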

Pro Tip: If a specific model causes the crash, try re-pulling it:

ollama pull model-name

Solution 2: Fix Port Conflicts

Port conflicts are one of the leading causes of backend errors. If another service is already using Ollama’s default port (11434), Ollama cannot bind to it and exits with an "address already in use" error, and requests sent to that port are answered by the other service instead.

Step 1: Check What’s Using the Port

Run:

- macOS/Linux:

lsof -i :11434

- Windows:

netstat -ano | findstr 11434
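If you would rather check from a script, one portable approach is simply to try binding to the port. This is a sketch under assumptions: the function name `port_is_free` is hypothetical, it tests only IPv4 on the given host, and unlike the commands above it cannot tell you which process holds the port.

```python
import errno
import socket


def port_is_free(port: int, host: str = "127.0.0.1") -> bool:
    """Return True if we can bind to host:port right now."""
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as sock:
        try:
            sock.bind((host, port))
        except OSError as err:
            if err.errno == errno.EADDRINUSE:
                return False
            raise  # e.g. permission denied on a privileged port
        return True
```

`port_is_free(11434)` returning False while Ollama is stopped suggests another service has taken the default port.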

If another service is occupying the port, you have two options:

- Stop the conflicting service

- Change Ollama’s port

Step 2: Change Ollama’s Port

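You can specify a new address and port via the OLLAMA_HOST environment variable. To change only the port and keep Ollama reachable from the local machine alone:

export OLLAMA_HOST=127.0.0.1:11500

Use 0.0.0.0:11500 instead only if other machines need to reach the server, since that binds to every network interface. Then restart the server from the same shell so it picks up the variable.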

Important: If you change the port, update your API calls or frontend configuration accordingly.

Failing to synchronize your new port number with your application is a subtle mistake that triggers repeated 500 errors.
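One way to keep the application and the server in sync is to derive the client URL from the same OLLAMA_HOST variable instead of hard-coding the port. The helper below is a simplified sketch: `base_url` is a hypothetical name, the parsing ignores IPv6 addresses, and it is not the parsing logic Ollama itself uses.

```python
import os
from typing import Mapping, Optional

DEFAULT_HOST = "127.0.0.1"
DEFAULT_PORT = 11434


def base_url(env: Optional[Mapping[str, str]] = None) -> str:
    """Build a client base URL from OLLAMA_HOST (simplified parsing)."""
    env = os.environ if env is None else env
    raw = env.get("OLLAMA_HOST", "").strip()
    scheme = "http"
    if "://" in raw:
        scheme, raw = raw.split("://", 1)
    host, _, port = raw.rstrip("/").partition(":")
    # 0.0.0.0 is a bind address, not a destination; connect via loopback.
    if host in ("", "0.0.0.0"):
        host = DEFAULT_HOST
    return f"{scheme}://{host}:{port or DEFAULT_PORT}"
```

With this in place, changing the port in one environment variable updates both sides, provided the application reads the same environment as the server.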

Solution 3: Verify Reverse Proxy Configuration

If you’re running Ollama behind a reverse proxy such as Nginx or Apache, configuration mistakes can result in a 500 error—even if Ollama itself is running properly.

Common Reverse Proxy Problems

- Incorrect upstream server definition

- Missing proxy headers

- Timeout settings too low

- HTTPS misconfiguration

Example Nginx Configuration

A simplified correct setup might look like this:

location / {
    proxy_pass http://localhost:11434;
    proxy_set_header Host $host;
    proxy_set_header X-Real-IP $remote_addr;
}

If you see a 500 error through your domain but not through localhost, the issue likely lies in the proxy configuration.
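That comparison can be written down as a small decision rule. The sketch below assumes you have already collected two HTTP status codes, one from a direct request to Ollama and one through the proxy, with None meaning no HTTP response at all; the function name and the wording of the verdicts are illustrative only.

```python
from typing import Optional


def locate_fault(direct_status: Optional[int], proxied_status: Optional[int]) -> str:
    """Compare a direct request with a proxied one. None means no HTTP response."""
    if direct_status is None:
        return "ollama unreachable: check the process and port first"
    if direct_status >= 500:
        return "ollama itself is failing: check its logs"
    if proxied_status is None or proxied_status >= 500:
        return "proxy layer: check upstream, headers, and timeouts"
    return "both paths healthy"
```

For example, `locate_fault(200, 500)` points at the proxy layer, matching the case described above.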

Check Proxy Logs

Nginx logs are typically located at:

/var/log/nginx/error.log

Look for upstream connection issues or timeout warnings.
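As a reading aid for that log, the sketch below maps a few substrings that appear in common Nginx upstream error messages to a likely cause. The substrings come from typical Nginx output, but the cause descriptions are this sketch's own interpretation and the list is far from complete.

```python
# Substrings from common nginx error messages, mapped to a likely cause.
# The cause descriptions are this sketch's own interpretation.
HINTS = [
    ("connection refused", "nothing is listening on the upstream address"),
    ("upstream timed out", "the backend took longer than the proxy timeout"),
    ("prematurely closed", "the backend dropped the connection mid-request"),
    ("no live upstreams", "every configured upstream is marked as down"),
]


def explain(log_line: str) -> str:
    """Return a likely cause for one nginx error.log line."""
    lowered = log_line.lower()
    for needle, cause in HINTS:
        if needle in lowered:
            return cause
    return "unrecognized: read the full line"
```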

Tip: If large models take a long time to respond, raise the proxy timeouts. In Nginx these are proxy_read_timeout and proxy_send_timeout, which both default to 60 seconds.

Solution 4: Review Firewall and Network Rules

Sometimes Ollama runs perfectly—but external requests can’t reach it due to firewall restrictions.

This is especially common when:

- Hosting on a cloud server

- Running inside Docker

- Using corporate networks

Step 1: Check Local Firewall

Ensure the designated Ollama port is allowed through your firewall:

- macOS: System Settings > Network > Firewall

- Linux:

sudo ufw status

- Windows: Windows Defender Firewall settings

Step 2: Cloud Security Groups

If hosted remotely (e.g., VPS or cloud VM), verify that inbound traffic on the port is allowed in security groups.

Step 3: Docker Port Mapping

If running inside Docker, publish the container port to the host. With the official ollama/ollama image:

docker run -d -p 11434:11434 --name ollama ollama/ollama

Without correct mapping, requests from outside the container will fail—even if the container itself works internally.

Solution 5: Check Environment Variables and Permissions

Misconfigured environment variables can silently cause 500 errors.

Environment Variable Mistakes

- Incorrect OLLAMA_HOST value

- Binding to a restricted port (below 1024)

- Incorrect IP binding (127.0.0.1 vs 0.0.0.0)

For example:

- 127.0.0.1 allows only local access

- 0.0.0.0 allows external access

If you need external access but bind only to 127.0.0.1, requests from other machines may cause gateway or 500 errors via proxies.
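The difference between the two addresses can be checked programmatically with Python's standard ipaddress module. This is a small illustrative helper; the name `bind_scope` and its return labels are made up for this sketch.

```python
import ipaddress


def bind_scope(address: str) -> str:
    """Describe who can reach a server bound to the given IP address."""
    ip = ipaddress.ip_address(address)
    if ip.is_unspecified:  # 0.0.0.0 or ::
        return "all interfaces"
    if ip.is_loopback:  # 127.0.0.0/8 or ::1
        return "local only"
    return "single interface"
```

A deployment script could call this on the host part of OLLAMA_HOST and warn when a server meant for remote clients is bound as "local only".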

Permission Issues

Binding to ports below 1024 requires elevated privileges. If you attempt to bind to port 80 without root permissions, Ollama may fail to start correctly.

Solution:

- Use a higher port (recommended)

- Or configure proper privileges carefully
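A quick sanity check on the chosen port can catch this before the server is started. The sketch below hard-codes the conventional 1024 boundary, which is an assumption: some systems let administrators move it, so treat the result as a hint rather than a guarantee.

```python
def port_warning(port: int) -> str:
    """Flag port numbers that are invalid or usually need elevated rights."""
    if not 1 <= port <= 65535:
        return "invalid port"
    if port < 1024:
        return "privileged port: needs elevated rights on most Unix-like systems"
    return "ok"
```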

A Systematic Debugging Approach

Instead of trying random fixes, use this structured method:

- Confirm Ollama is running

- Check port availability

- Test direct localhost access

- Inspect proxy configuration

- Review firewall and permissions

Working layer by layer—from backend process to network exposure—helps you pinpoint the exact failure point.
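The layer-by-layer idea can be sketched as an ordered list of checks that stops at the first failure. The runner below is generic: the check names and the functions you plug in are up to you, and nothing here is specific to Ollama.

```python
from typing import Callable, List, Optional, Tuple

Check = Tuple[str, Callable[[], bool]]


def first_failure(checks: List[Check]) -> Optional[str]:
    """Run checks in order; return the name of the first one that fails."""
    for name, check in checks:
        try:
            passed = check()
        except Exception:
            passed = False  # a check that raises counts as a failure
        if not passed:
            return name
    return None  # every layer passed
```

Ordering the list the same way as the steps above (process, port, localhost, proxy, firewall) means the returned name is the lowest layer that is broken, which is where to start fixing.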

Preventing Future 500 Errors

Prevention is always better than reactive fixing. Here are a few best practices:

- Keep ports documented and reserved

- Monitor logs regularly

- Use consistent environment variable management

- Test configs after changes

- Avoid running multiple services on the same ports

You can also implement monitoring tools to detect backend crashes early.
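As a rough idea of what such monitoring does, the sketch below polls a health check and raises an alert once after a number of consecutive failures. Everything here is hypothetical scaffolding: `is_healthy` and `on_down` are callables you supply, and the threshold and interval defaults are arbitrary.

```python
import time
from typing import Callable, Optional


def watch(
    is_healthy: Callable[[], bool],
    on_down: Callable[[int], None],
    threshold: int = 3,
    interval: float = 30.0,
    max_checks: Optional[int] = None,
    sleep: Callable[[float], None] = time.sleep,
) -> None:
    """Poll is_healthy; call on_down once per outage after `threshold` failures in a row."""
    failures = 0
    done = 0
    while max_checks is None or done < max_checks:
        if is_healthy():
            failures = 0  # recovery resets the streak
        else:
            failures += 1
            if failures == threshold:
                on_down(failures)
        done += 1
        sleep(interval)
```

Requiring several consecutive failures avoids alerting on a single slow response, for example while a large model is still loading.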

Final Thoughts

An Ollama 500 Internal Server Error may seem intimidating at first, but it’s rarely mysterious. In most cases, the problem lies in backend availability, port conflicts, proxy setup, firewall rules, or environment misconfiguration.

By carefully checking each layer of your setup—from the Ollama process itself to network routing—you can resolve the issue efficiently. Think of the system as a pipeline: if one segment is blocked or misaligned, the entire flow breaks down.

The key is not just fixing the error—but understanding the architecture behind it. Once you do, future troubleshooting becomes faster, cleaner, and far less stressful.

Next time you see a 500 error, you’ll know exactly where to start.
