Artificial intelligence has changed how people interact with digital technology, from voice assistants to predictive text and recommendation systems. One of the more recent advances is visual understanding: the ability to interpret and analyze images. That raises a common question: can ChatGPT read images? This article looks at how image interpretation works in models like ChatGPT, what it is useful for, and where it still falls short.

Understanding ChatGPT’s Core Capabilities

When it launched in late 2022, ChatGPT was a text-only model focused on natural language processing (NLP) tasks such as writing, summarizing, translating, and answering questions. Its architecture is based on the transformer, a deep learning architecture introduced by Google researchers in 2017 and made famous by OpenAI’s GPT (Generative Pre-trained Transformer) family.

However, this original version had a limitation: it accepted only text as input. That meant it couldn’t process any form of visual information, whether photos, graphs, or screenshots. Image understanding was instead handled by separate models, such as OpenAI’s CLIP (Contrastive Language–Image Pretraining), which matches images to text descriptions, and vision models developed by companies like Google and Meta.
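
To make the idea behind a contrastive model like CLIP more concrete, here is a minimal sketch of how an image can be matched to a caption once both have been turned into vectors in a shared space. The three-dimensional embeddings and the captions are made up for illustration; a real model produces much larger vectors with trained image and text encoders, which are not shown here.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def best_caption(image_embedding, caption_embeddings):
    """Return the caption whose embedding points most nearly the same way as the image's."""
    return max(
        caption_embeddings,
        key=lambda caption: cosine_similarity(
            image_embedding, caption_embeddings[caption]
        ),
    )


# Hypothetical embeddings, invented for this example only.
image_embedding = [0.9, 0.1, 0.2]
caption_embeddings = {
    "a photo of a dog": [0.8, 0.2, 0.1],
    "a photo of a car": [0.1, 0.9, 0.3],
    "a bar chart": [0.2, 0.1, 0.9],
}

print(best_caption(image_embedding, caption_embeddings))  # a photo of a dog
```

The caption with the highest similarity score wins, which is why this kind of model can label images using plain-language descriptions instead of a fixed list of categories.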

Multimodal AI: Combining Text and Images

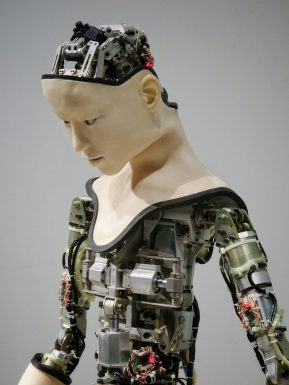

In 2023, OpenAI introduced multimodal capabilities to some versions of ChatGPT, particularly GPT-4 and GPT-4 Turbo. These versions can perform vision-based tasks, allowing the model to “see” and interpret images.

But what does that really mean? It means ChatGPT can now analyze pictures, recognize objects, read text within images, describe scenery, interpret charts, and even answer questions about an image it has been shown.

This move into multimodal AI helps bridge the gap between human-like perception and digital analysis. By combining natural language understanding with visual reasoning, the model can ground its answers in what an image actually shows, without relying on the user to describe it in words.

How ChatGPT Reads and Understands Images

OpenAI has not published the full technical details of how ChatGPT processes an image, but at a high level the process can be described in four steps:

- Image Input: Users upload or share an image with the AI interface.

- Feature Extraction: The AI identifies prominent elements within the image, such as objects, text, shapes, and colors.

- Contextual Interpretation: Using its training data, the model relates those visual elements to human language concepts.

- Response Generation: ChatGPT constructs a description or answer that best aligns with the image and the user’s query.

This process mirrors how the human brain works to some extent: we observe, label, interpret, and respond — steps that ChatGPT now emulates through computational techniques.
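
As a rough illustration of the feature extraction step, many vision transformer models begin by cutting an image into small fixed-size patches that are then handled much like words in a sentence. Whether ChatGPT does exactly this is an assumption, since OpenAI has not disclosed the details. The sketch below shows only the patch-splitting idea on a tiny grid of numbers standing in for pixels; the function name `split_into_patches` is hypothetical.

```python
def split_into_patches(image, patch_size):
    """Split a 2D grid of pixel values into non-overlapping square patches.

    `image` is a list of rows. Its height and width must be multiples of
    `patch_size`. Patches are returned in row-major order, each one
    flattened into a single list of pixel values.
    """
    height, width = len(image), len(image[0])
    if height % patch_size or width % patch_size:
        raise ValueError("image dimensions must be multiples of patch_size")

    patches = []
    for top in range(0, height, patch_size):
        for left in range(0, width, patch_size):
            patch = [
                image[row][col]
                for row in range(top, top + patch_size)
                for col in range(left, left + patch_size)
            ]
            patches.append(patch)
    return patches


# A tiny 4x4 grayscale "image" used purely as an example.
image = [
    [0, 1, 2, 3],
    [4, 5, 6, 7],
    [8, 9, 10, 11],
    [12, 13, 14, 15],
]

patches = split_into_patches(image, 2)
print(len(patches))  # 4
print(patches[0])    # [0, 1, 4, 5]
```

In a real model, each patch would then be converted into a vector and passed through the network alongside the text of the user's question.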

Real-World Use Cases of Visual AI

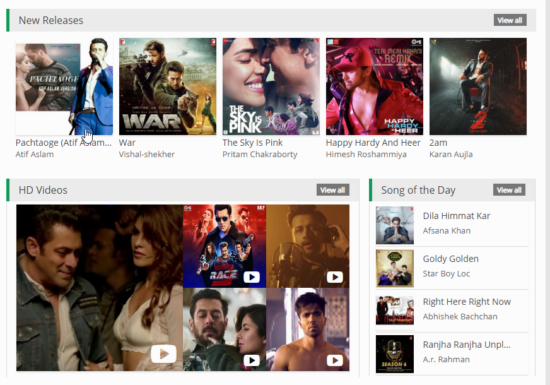

With this enhanced ability, ChatGPT becomes a powerful tool in various real-world scenarios. Some compelling examples include:

- Accessibility Assistance: Helping visually impaired users by describing images or reading text embedded in pictures.

- Education: Interpreting graphs, maps, and diagrams for students and providing explanations in simple language.

- Customer Support: Troubleshooting technical issues by analyzing screenshots or photos of devices.

- Creative Design: Assisting designers by offering feedback on layouts, recognizing aesthetic elements, or suggesting improvements.

- Medical Imaging: Helping explain X-rays, MRIs, and other diagnostic visuals in general terms, as a supplement to review by healthcare professionals and not a replacement for it.

The applications are wide-ranging and continue to grow as the model is refined and trained on better datasets.

Limitations of Visual ChatGPT

Despite its technological brilliance, ChatGPT’s ability to interpret images is not without limitations. Here are some worth noting:

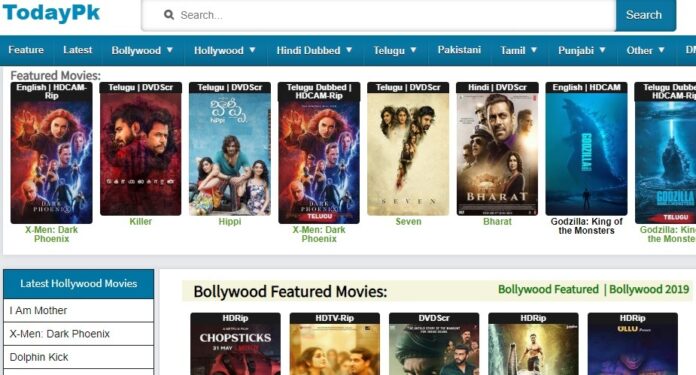

- Training Scope: The model interprets images using patterns learned during training. It can analyze images it has never seen before, but rare, niche, or highly specialized pictures are more likely to be misinterpreted.

- Lack of Real-Time Vision: Unlike humans, who process continuous visual input, ChatGPT’s image feature analyzes static images that a user uploads, not a live video stream.

- No Subjective Judgment: It can describe what it sees and comment on common design conventions, but it lacks the emotional understanding and personal aesthetic judgment typical of human feedback.

- Privacy Concerns: Uploading sensitive or personal images may pose privacy risks, as AI infrastructure still raises concerns about data retention and misuse.

These limitations remind us that while AI is impressive, it’s far from replacing human perception. It serves more as an aid than a substitute.

How to Try It Yourself

Curious how to explore this feature? If you’re using ChatGPT through OpenAI’s interface with a Plus or Pro subscription, you may have access to the image processing feature in GPT-4 Turbo. Here’s how you can test it:

- Upload an image via the chat interface.

- Ask the AI a question about the image; for example, “What do you see here?” or “Can you read the text in this photo?”

- Watch as the model returns a detailed and contextual response based on its interpretation.

Using this simple procedure, anyone — from students to professionals — can benefit from the synergy of visual and text-based AI.
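
The same upload-and-ask idea can also be sketched in code. The example below assumes the message format documented for OpenAI's Chat Completions API, where an image is passed inline as a base64 data URL next to the text question. It only builds the message and does not contact any service; the helper name `build_image_message` and the placeholder image bytes are made up for illustration.

```python
import base64
import json


def build_image_message(image_bytes, question, mime_type="image/png"):
    """Build a user message that pairs a text question with an inline image."""
    encoded = base64.b64encode(image_bytes).decode("ascii")
    return {
        "role": "user",
        "content": [
            {"type": "text", "text": question},
            {
                "type": "image_url",
                "image_url": {"url": f"data:{mime_type};base64,{encoded}"},
            },
        ],
    }


# Placeholder bytes stand in for a real file, e.g. open("photo.png", "rb").read()
message = build_image_message(b"\x89PNG placeholder", "What do you see here?")
print(json.dumps(message, indent=2))
```

With the official `openai` Python package installed and an API key configured, a message like this would typically be sent with `client.chat.completions.create(model=..., messages=[message])`. Which vision-capable model to name there depends on your account and is not covered in this article.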

The Future of Visual AI

The integration of image reading capabilities in AI like ChatGPT signals a turning point in the AI revolution. We are moving from single-mode models to truly multimodal intelligent systems. As image recognition pairs with natural language processing, new horizons open in:

- Autonomous vehicles and robotics

- Smart surveillance and security systems

- Advanced healthcare diagnostics

- Fully immersive educational platforms

Future versions may even involve full video understanding, better scene comprehension, emotion recognition, and more proactive interpretation skills.

Summary: So, Can ChatGPT Read Images?

Yes, within limits. Thanks to the evolution of OpenAI’s multimodal models like GPT-4, ChatGPT can now analyze images, extract meaningful information, and answer questions about what it finds. While it’s not a perfect visual analyst, its ability to bridge the gap between sight and language marks a remarkable step forward.

Ultimately, this combination of image and language understanding brings humans closer to interacting with AI systems that “see” the way we do — or at least come impressively close.
