As artificial intelligence continues to evolve, integrating autonomous agents into production systems is no longer a futuristic concept; it is reality. These agents, ranging from simple bots to complex multi-step learners, are transforming how businesses automate decisions and streamline processes. With that power comes significant responsibility: managing hand-offs, integrating tools, and implementing solid guardrails have become crucial to ensuring that AI agents act safely, reliably, and effectively in production environments.

Understanding Agents in Production

At its core, an AI agent operates by receiving input from a user or another system, processing that input using an underlying model or logic, and then executing a task. In production settings, this could mean responding to a customer query, making a purchase decision, or updating a database.

These agents must often deal with real-world variability, scale, and time sensitivity—challenges that are not typically present in controlled testing environments. As such, deploying an agent into a production setting isn’t merely a matter of “plug and play.” There need to be structured mechanisms for control, hand-offs, and validation.

The Importance of Hand-Offs

Hand-offs refer to the points at which control transitions between the agent and another system or human. These decision points are crucial for maintaining oversight, ensuring correctness, and building trust.

There are various modes of hand-offs in a production ecosystem:

- Automated-to-Human: When the agent's confidence falls below a set threshold or it encounters ambiguity it cannot resolve.

- Human-to-Automated: When an operator gives the “green light” for the agent to proceed with actions post-validation.

- Agent-to-Agent: When multiple agents work together, each handling part of a task before passing it along to the next.

Clear design and rules around hand-offs help balance automation with reliability. For example, a customer service assistant might pass tricky refund requests to a human supervisor, ensuring that sensitive cases are handled with care.
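As a minimal sketch of an automated-to-human hand-off rule, the snippet below routes a proposed action to a human whenever the agent's confidence is low or the case is flagged as sensitive. The names `AgentDecision`, `Route`, and `route_decision`, along with the 0.8 threshold, are illustrative assumptions rather than part of any particular framework.

```python
from dataclasses import dataclass
from enum import Enum


class Route(Enum):
    AGENT = "agent"  # the agent proceeds on its own
    HUMAN = "human"  # control is handed to a human reviewer


@dataclass
class AgentDecision:
    action: str              # what the agent proposes to do
    confidence: float        # self-reported confidence, 0.0 to 1.0
    sensitive: bool = False  # hypothetical flag, e.g. for refund requests


def route_decision(decision: AgentDecision, threshold: float = 0.8) -> Route:
    """Hand off to a human when confidence is low or the case is sensitive."""
    if decision.sensitive or decision.confidence < threshold:
        return Route.HUMAN
    return Route.AGENT


# A routine answer stays with the agent; a refund goes to a supervisor.
routine = route_decision(AgentDecision("answer_faq", confidence=0.95))
refund = route_decision(AgentDecision("issue_refund", confidence=0.95, sensitive=True))
```

In practice the threshold would likely be tuned per task, and the sensitivity flag would come from business rules rather than from the agent itself.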

Tooling: The Agent’s Arsenal

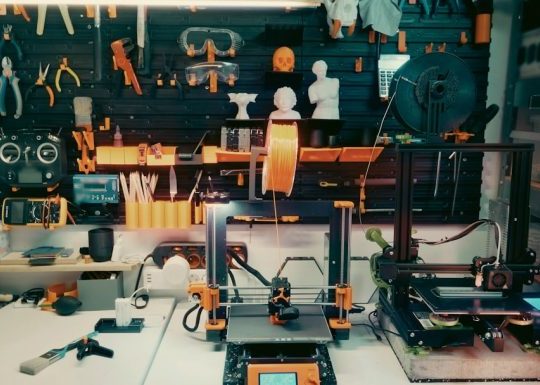

Production agents don’t act in isolation—they are usually empowered with specific tools to complete their tasks. These could be APIs, internal services, databases, or even physical interfaces.

The critical challenges here include:

- Correct Tool Selection: Agents must know which tool is suited to a specific task.

- Tool Safety: Tools must have limitations to prevent destructive behavior. For example, a “delete” API should require multiple verification steps before execution.

- Latency: Tool response time must be optimized to ensure that agents remain efficient in real-time systems.

Developers often equip agents with a set of tools via “toolkits” or “modules.” These tool integrations are designed with interfaces the agent understands, allowing it to execute actions in well-scoped ways. Some systems use a ReAct-style architecture (reasoning and acting) where the agent evaluates its context before choosing and deploying a tool.
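One way to picture a well-scoped toolkit is a small registry that refuses to run destructive tools without explicit confirmation. The sketch below is a hypothetical design, not the API of any real agent framework; `Tool`, `ToolRegistry`, and the `confirmed` flag are assumed names used only for illustration.

```python
from dataclasses import dataclass
from typing import Callable, Dict


@dataclass
class Tool:
    name: str
    func: Callable[..., str]
    destructive: bool = False  # destructive tools need explicit confirmation


class ToolRegistry:
    """Holds the tools an agent may call and enforces a basic safety check."""

    def __init__(self) -> None:
        self._tools: Dict[str, Tool] = {}

    def register(self, tool: Tool) -> None:
        self._tools[tool.name] = tool

    def call(self, name: str, *, confirmed: bool = False, **kwargs) -> str:
        if name not in self._tools:
            raise KeyError(f"unknown tool: {name}")
        tool = self._tools[name]
        if tool.destructive and not confirmed:
            # Block the call until a human or a verification step confirms it.
            raise PermissionError(f"tool '{name}' requires confirmation")
        return tool.func(**kwargs)


registry = ToolRegistry()
registry.register(Tool("lookup_order", lambda order_id: f"order {order_id}: shipped"))
registry.register(
    Tool("delete_order", lambda order_id: f"order {order_id} deleted", destructive=True)
)
```

Here `registry.call("lookup_order", order_id="42")` runs immediately, while `registry.call("delete_order", order_id="42")` raises `PermissionError` unless `confirmed=True` is passed. A production system would probably replace the boolean with a real approval step.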

Guardrails: Keeping Agents on Track

Guardrails are protective measures that constrain agent behavior to keep it safe, ethical, and aligned with business or user goals. Without guardrails, even highly intelligent agents can make costly or dangerous mistakes in production.

Guardrails come in various forms:

- Behavioral guardrails: Limit the scope or nature of agent actions. For instance, an agent trained on internal email responses should never generate public-facing marketing messages.

- Ethical guardrails: Prevent agents from producing biased, offensive, or misleading content. These often involve content moderation filters and fairness checks.

- Operational guardrails: Include rate limits, logging, rollbacks, and circuit breakers to manage technical performance and reduce risk.
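To make the operational category concrete, here is a minimal circuit-breaker sketch: after a set number of consecutive failures it stops calling the wrapped function for a cool-down period. The class name, parameters, and defaults are assumptions chosen for illustration, and the injectable `clock` exists only to make the behavior easy to test.

```python
import time
from typing import Any, Callable, Optional


class CircuitOpenError(RuntimeError):
    """Raised when a call is skipped because the circuit is open."""


class CircuitBreaker:
    def __init__(
        self,
        max_failures: int = 3,
        reset_after: float = 30.0,
        clock: Callable[[], float] = time.monotonic,
    ) -> None:
        self.max_failures = max_failures
        self.reset_after = reset_after  # cool-down in seconds
        self._clock = clock
        self._failures = 0
        self._opened_at: Optional[float] = None

    def call(self, func: Callable[..., Any], *args: Any, **kwargs: Any) -> Any:
        if self._opened_at is not None:
            if self._clock() - self._opened_at < self.reset_after:
                raise CircuitOpenError("circuit open; call skipped")
            # Cool-down elapsed: allow one trial call (half-open state).
            # A single further failure will reopen the circuit.
            self._opened_at = None
            self._failures = self.max_failures - 1
        try:
            result = func(*args, **kwargs)
        except Exception:
            self._failures += 1
            if self._failures >= self.max_failures:
                self._opened_at = self._clock()
            raise
        self._failures = 0  # any success resets the failure count
        return result
```

Wrapping each tool call in `breaker.call(...)` keeps a failing downstream service from being hammered by a retrying agent, and gives operators a clear signal (`CircuitOpenError`) to log or alert on.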

Another approach is a feedback loop in which the agent's performance is continuously monitored and refined based on user feedback or internal metrics. Techniques such as reinforcement learning from human feedback (RLHF) use human preference judgments to tune the underlying model, so that its behavior can keep pace with user expectations and operational insights.

Common Architectures and Frameworks

There are several frameworks that support building and running agents in production. Some of the popular ones include:

- LangChain: Allows you to connect language models with external tools, databases, and APIs.

- Auto-GPT: Designed for agents that can pursue long-term goals by self-prompting and planning.

- OpenAI Function Calling: Lets developers describe functions with a JSON Schema so that the model can return structured arguments for the application to validate and execute.

These frameworks often encourage a modular agent design in which core components (memory, action, reasoning, and interface) can be swapped or upgraded independently. This modularity aids sandbox testing and helps isolate failures when something goes wrong in production.
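The modular idea can be sketched with plain Python protocols: the agent depends only on small interfaces, so a memory store or reasoning component can be replaced without touching the rest. Everything here (`Memory`, `Reasoner`, `ListMemory`, `EchoReasoner`, `Agent`) is a hypothetical illustration and does not mirror the class layout of any framework named above.

```python
from typing import List, Protocol


class Memory(Protocol):
    def recall(self) -> List[str]: ...
    def store(self, item: str) -> None: ...


class Reasoner(Protocol):
    def decide(self, user_input: str, history: List[str]) -> str: ...


class ListMemory:
    """Simplest possible memory: an in-process list."""

    def __init__(self) -> None:
        self._items: List[str] = []

    def recall(self) -> List[str]:
        return list(self._items)

    def store(self, item: str) -> None:
        self._items.append(item)


class EchoReasoner:
    """Stand-in for a model call; a real reasoner would query an LLM."""

    def decide(self, user_input: str, history: List[str]) -> str:
        return f"echo({len(history)}): {user_input}"


class Agent:
    def __init__(self, memory: Memory, reasoner: Reasoner) -> None:
        self.memory = memory
        self.reasoner = reasoner

    def step(self, user_input: str) -> str:
        reply = self.reasoner.decide(user_input, self.memory.recall())
        self.memory.store(user_input)
        return reply


agent = Agent(ListMemory(), EchoReasoner())
first_reply = agent.step("hello")
```

Because `Agent` only relies on the two protocols, a sandbox test can pass in stubs like these while production wires in a database-backed memory and a model-backed reasoner.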

Real-World Use Cases

Many industries are already leveraging AI agents in production. Here are a few illustrative examples:

- Customer Service: Autonomous agents handle tier-1 tickets, escalating only complex issues to human reps.

- DevOps Management: AI assistants perform health checks, restart services, and notify developers of anomalies.

- Finance: Agents review and summarize financial data, flag anomalies, and even author initial drafts of insights.

These implementations illustrate the spectrum of agent capabilities—from decision support to task automation.

Challenges and Future Directions

Despite the advancements, several challenges remain:

- Scalability: Ensuring that agents perform under heavy loads without latency issues or errors.

- Transparency: Making agent decisions interpretable to users and auditors.

- Security: Preventing malicious exploitation of agents through prompt injections or faulty tool design.

The future may bring agents that are more cooperative, context-aware, and legally accountable. Emerging areas like explainable AI (XAI) and multi-agent coordination systems are actively being developed to tackle these evolving demands.

Conclusion

Integrating agents into production settings is both an opportunity and a responsibility. By effectively managing hand-offs, equipping agents with the right tools, and enforcing strict guardrails, organizations can harness the full potential of AI-powered automation without compromising on safety, ethics, or performance. As tools and frameworks continue to mature, production-grade agents will become indispensable allies across industry verticals.

Frequently Asked Questions (FAQ)

- Q: What is the difference between a bot and an agent?

A bot typically follows predefined scripts or rules, whereas an agent has reasoning capabilities and can choose among tools or actions based on the situation.

- Q: How do I know when to involve a human in an AI system?

Use confidence thresholds, ambiguity scores, or performance metrics to trigger human-in-the-loop interventions when the agent is unsure or the action is potentially risky.

- Q: Are agents secure for use in finance or healthcare?

With the right guardrails, audit trails, and compliance enforcement, agents can be deployed securely in sensitive fields. However, regular reviews are essential.

- Q: What happens when an agent fails or produces bad output?

Production systems should include fail-safes such as rollbacks, alerts, and human override mechanisms to catch and correct such issues.

- Q: Can multiple agents work together?

Yes. Multi-agent systems are increasingly popular, where specialized agents coordinate in a pipeline or network to solve complex tasks collaboratively.
