In recent years, the emergence of AI-powered voice assistants has dramatically altered how we interact with technology. These intelligent systems are now integrated into smartphones, smart speakers, cars, and even home appliances, providing users with hands-free convenience and real-time assistance. But how do they really work, and what makes them so effective? Understanding the inner workings of AI-powered voice assistants is essential to appreciating their transformative potential and the future they promise in human-machine interaction.

What Are AI-Powered Voice Assistants?

An AI-powered voice assistant is a digital assistant that uses artificial intelligence, natural language processing (NLP), and machine learning (ML) to understand spoken commands and perform tasks. These assistants can carry out a variety of functions — from setting reminders and sending messages to controlling smart home devices and answering general questions.

Popular examples include:

- Amazon Alexa

- Apple Siri

- Google Assistant

- Microsoft Cortana (its consumer deployment has been reduced, but the underlying technology still powers other services)

Voice assistants have evolved from simple voice command tools into complex, multi-functional platforms capable of contextual understanding and personal adaptability.

The Core Technologies Behind Voice Assistants

To grasp how these systems work, it’s important to break down the underlying technologies that enable them. Here’s a look at the key components involved in AI-powered voice assistants:

1. Voice Recognition (Automatic Speech Recognition – ASR)

This is the first stage where the assistant captures and converts spoken language into text. Systems like Google’s and Amazon’s ASR engines process real-time audio streams and transcribe them into machine-readable data. This component forms the foundation for understanding user intent.

2. Natural Language Processing (NLP)

Once speech is converted to text, NLP takes over. This branch of artificial intelligence interprets the meaning behind the words. NLP breaks down sentences into grammatical elements and extracts intents and entities from the user’s statement. For example, in the command, “Set an alarm for 7 AM,” NLP identifies that the user wants to set an alarm and recognizes “7 AM” as the time.
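As a rough illustration of intent and entity extraction, the sketch below uses hand-written regular expressions. This is a simplification: production assistants rely on trained statistical models, and the names `INTENT_PATTERNS`, `ParsedCommand`, and `parse_command` are hypothetical, chosen only for this example.

```python
import re
from dataclasses import dataclass, field

# Hypothetical rule table: each intent maps to a regex whose named groups
# capture the entities. Real assistants use trained models instead of rules.
INTENT_PATTERNS = {
    "set_alarm": re.compile(
        r"\bset an alarm for (?P<time>\d{1,2}(?::\d{2})?\s*(?:am|pm))",
        re.IGNORECASE,
    ),
    "play_music": re.compile(r"\bplay (?P<track>.+)", re.IGNORECASE),
}


@dataclass
class ParsedCommand:
    intent: str
    entities: dict = field(default_factory=dict)


def parse_command(text: str) -> ParsedCommand:
    """Return the first matching intent and its entities, or 'unknown'."""
    for intent, pattern in INTENT_PATTERNS.items():
        match = pattern.search(text)
        if match:
            return ParsedCommand(intent, match.groupdict())
    return ParsedCommand("unknown")


print(parse_command("Set an alarm for 7 AM"))
# ParsedCommand(intent='set_alarm', entities={'time': '7 AM'})
```

Rules like these break quickly on paraphrases ("wake me at seven"), which is one reason real systems learn the mapping from data.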

3. Natural Language Understanding (NLU) and Natural Language Generation (NLG)

These two subfields of NLP work in tandem. NLU handles comprehension: it resolves the intent and entities behind complex or ambiguous phrasing. NLG handles the reverse direction, turning the system’s structured result into a human-like response. Together they let the assistant maintain conversation flow and appear more “intelligent.”
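The simplest form of NLG is template filling, sketched below. It assumes the intent and entities have already been extracted; the template table and the `generate_response` function are hypothetical, and modern assistants may use learned language models rather than fixed templates.

```python
# Hypothetical response templates keyed by intent name.
RESPONSE_TEMPLATES = {
    "set_alarm": "Okay, your alarm is set for {time}.",
    "play_music": "Playing {track}.",
}
FALLBACK = "Sorry, I didn't catch that."


def generate_response(intent: str, entities: dict) -> str:
    """Fill the template for the intent; fall back if anything is missing."""
    template = RESPONSE_TEMPLATES.get(intent)
    if template is None:
        return FALLBACK
    try:
        return template.format(**entities)
    except KeyError:
        # A required entity was not extracted upstream.
        return FALLBACK


print(generate_response("set_alarm", {"time": "7 AM"}))
# Okay, your alarm is set for 7 AM.
```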

4. Machine Learning (ML)

Over time, voice assistants improve by learning from interactions. ML algorithms study usage patterns, correct misunderstandings, and predict user preferences. Supervised learning (training on labeled examples, such as transcribed audio) and unsupervised learning (finding structure in unlabeled data) both contribute, so the assistant becomes more accurate and better tuned to each user.
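A trained model is beyond the scope of a short example, but the idea of predicting preferences from usage patterns can be shown with a frequency-count baseline. This is a deliberately simple stand-in, not how any particular assistant works; `PreferenceModel` and its methods are hypothetical names.

```python
from collections import Counter, defaultdict
from typing import Optional


class PreferenceModel:
    """Predicts the most frequent past choice for a given context."""

    def __init__(self) -> None:
        # context -> Counter of choices observed in that context
        self._counts = defaultdict(Counter)

    def observe(self, context: str, choice: str) -> None:
        """Record one interaction, e.g. what the user asked for in the morning."""
        self._counts[context][choice] += 1

    def predict(self, context: str, default: Optional[str] = None) -> Optional[str]:
        """Return the most common choice seen in this context, if any."""
        counts = self._counts.get(context)
        if not counts:
            return default
        return counts.most_common(1)[0][0]


model = PreferenceModel()
for choice in ("news", "news", "jazz"):
    model.observe("morning", choice)

print(model.predict("morning"))  # news
print(model.predict("evening"))  # None
```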

5. Text-to-Speech (TTS) Conversion

Finally, the assistant converts the generated textual response back into speech. Advanced TTS engines produce natural, emotionally nuanced voices, making the experience more engaging for users.

How Do They Work Step-by-Step?

Let’s walk through a general process of how a voice assistant interprets and responds to a user query:

- Wake Word Detection: The assistant listens passively, usually on the device itself, for a specific trigger phrase such as “Hey Siri” or “OK Google.”

- Speech Capture and Processing: Once triggered, it begins recording your voice and sends the audio to its cloud servers for interpretation.

- Speech-to-Text Conversion: In the cloud, ASR technology translates the spoken words into text.

- Intent Analysis: NLP and NLU process the text to determine what action is being requested.

- Action Execution: The assistant then executes the action, whether that is switching on a smart bulb or creating a calendar reminder.

- Response Generation and Playback: The system uses NLG to craft a verbal response, which is then converted to audio via TTS and played back to the user.

From start to finish, this process often takes just a few seconds, showcasing the efficiency of today’s advanced computational systems.
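The steps above can be sketched as a chain of pluggable stages. The code below is only a structural outline under stated assumptions: `VoicePipeline` and its field names are hypothetical, and the demo stages are toy stand-ins that treat "audio" as UTF-8 text so the example runs without a microphone or a cloud service.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class VoicePipeline:
    """Chains the stages of one request; every stage is pluggable."""
    detect_wake_word: Callable[[bytes], bool]
    speech_to_text: Callable[[bytes], str]
    analyze_intent: Callable[[str], str]
    execute_action: Callable[[str], bool]
    generate_response: Callable[[str, bool], str]
    text_to_speech: Callable[[str], bytes]

    def handle(self, audio: bytes) -> Optional[bytes]:
        """Run one utterance through every stage; None if no wake word."""
        if not self.detect_wake_word(audio):
            return None  # stay idle: no trigger phrase heard
        text = self.speech_to_text(audio)
        intent = self.analyze_intent(text)
        succeeded = self.execute_action(intent)
        reply = self.generate_response(intent, succeeded)
        return self.text_to_speech(reply)


# Toy stand-ins for the real ASR, NLU, NLG, and TTS components.
demo = VoicePipeline(
    detect_wake_word=lambda audio: audio.lower().startswith(b"hey siri"),
    speech_to_text=lambda audio: audio.decode("utf-8"),
    analyze_intent=lambda text: "lights_on" if "lights" in text.lower() else "unknown",
    execute_action=lambda intent: intent == "lights_on",
    generate_response=lambda intent, ok: (
        "Turning on the lights." if ok else "Sorry, I can't do that yet."
    ),
    text_to_speech=lambda reply: reply.encode("utf-8"),
)

print(demo.handle(b"Hey Siri, turn on the lights"))  # b'Turning on the lights.'
print(demo.handle(b"turn on the lights"))            # None
```

Keeping each stage behind a plain callable makes it easy to swap a cloud service for an on-device model without touching the orchestration logic.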

Use Cases in Everyday Life

AI-powered voice assistants are becoming increasingly integrated into daily routines. Below are some common use cases:

- Smart Home Integration: Controlling lights, locks, thermostats, and security systems with voice commands.

- Personal Productivity: Managing calendars, setting alarms, dictating emails, and sending messages.

- Entertainment: Playing music, streaming videos, and recommending content based on preferences.

- Navigation: Providing real-time traffic updates and navigation while driving.

- Shopping and Banking: Reordering products, checking account balances, and tracking deliveries.

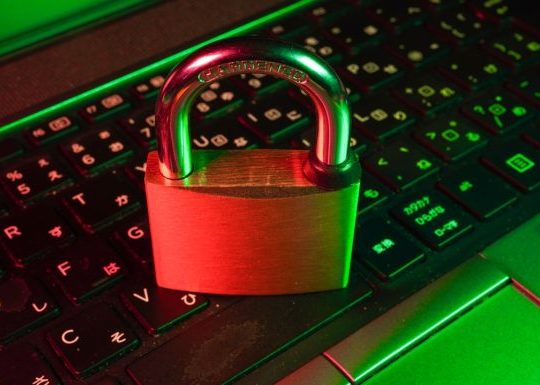

Security and Privacy Considerations

While the convenience of AI-powered voice assistants is undeniable, concerns about privacy and data security should not be overlooked. These systems collect and store audio data, which can include sensitive personal information. Companies like Apple and Google provide some control mechanisms, allowing users to delete stored conversations, disable certain features, or opt out of data collection.

However, the degree of transparency around what is retained and how it is used varies by provider. It is essential for users to regularly review privacy settings and understand the data governance policies enforced by their voice assistant platforms.

The Evolution and Future of Voice Assistants

As AI continues to develop, voice assistants are expected to become more capable, conversational, and context-aware. Upcoming enhancements may include:

- Multi-language fluency: Assistants becoming fully bilingual or multilingual in real-time conversations.

- Emotional intelligence: Detecting user emotions from tone and delivering empathetic responses.

- Greater personalization: Adjusting voice, conversation style, and content delivery based on past behavior and preferences.

- Offline capabilities: Local AI processing reducing dependency on cloud-based systems, increasing speed and privacy.

Companies are also investing in voice biometrics to authenticate users more effectively, reducing the risk of fraud and strengthening security layers.
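One common way to frame voice authentication is to compare a fixed-length "voiceprint" vector for the enrolled user against a vector computed from the new utterance. The sketch below assumes such vectors already exist (producing them requires a trained speaker model, which is not shown); the function names, the example vectors, and the 0.8 threshold are all hypothetical.

```python
import math
from typing import Sequence


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two equal-length vectors."""
    if len(a) != len(b):
        raise ValueError("embeddings must have the same length")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # a zero vector carries no speaker information
    return dot / (norm_a * norm_b)


def is_same_speaker(
    enrolled: Sequence[float],
    candidate: Sequence[float],
    threshold: float = 0.8,  # assumed value; real systems tune this on data
) -> bool:
    """Accept the candidate if its voiceprint is close enough to the enrolled one."""
    return cosine_similarity(enrolled, candidate) >= threshold


enrolled = [0.9, 0.1, 0.3]          # made-up voiceprint of the account owner
same_voice = [0.85, 0.15, 0.28]     # made-up vector from a new utterance
other_voice = [-0.2, 0.9, 0.1]      # made-up vector from a different speaker

print(is_same_speaker(enrolled, same_voice))   # True
print(is_same_speaker(enrolled, other_voice))  # False
```

The threshold trades false accepts against false rejects, so a stricter value suits sensitive actions such as banking.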

Challenges and Limitations

Despite their impressive capabilities, AI-powered voice assistants still face several hurdles:

- Accents and Dialects: Understanding regional variations in language remains a technical challenge.

- Background Noise: High levels of ambient noise can impair recognition accuracy.

- Context Limitations: Current systems still struggle with maintaining long-term context or understanding vague references.

- Connectivity Dependency: Reliance on cloud processing limits performance when the device is offline or the connection is poor.

Addressing these issues requires continuous improvements in AI models, edge computing, and device-level processing power.

Conclusion

AI-powered voice assistants are not just a novelty; they represent a fundamental shift in how humans engage with machines. Their combination of sophisticated technologies, including ASR, NLP, ML, and TTS, enables a level of interaction that is both powerful and intuitive. As they continue to evolve and become more integrated into our lives, maintaining a balanced perspective on their benefits, risks, and limitations will be crucial.

Informed users, transparent development practices, and ongoing technological innovation will ensure that voice assistants remain valuable tools that enhance productivity, convenience, and accessibility in the digital age.
