As local AI tools continue to evolve, many users are asking whether LM Studio can generate images in addition to running large language models. Known primarily for enabling users to run language models locally on personal hardware, LM Studio has rapidly expanded its feature set through 2024 and 2025. By 2026, the platform supports significantly broader multimodal capabilities, raising the question: can it truly handle AI-powered image generation? The answer depends on model compatibility, hardware, and how LM Studio fits into the broader AI workflow.

TLDR: LM Studio was originally designed to run large language models locally, but by 2026 it supports certain multimodal and image-generation-capable models, depending on hardware and setup. It is not a dedicated image-generation app like some cloud platforms, but it can run diffusion-based and vision-enabled models when properly configured. GPU power, available VRAM, and compatible model formats are the key factors. Its image-generation capabilities are most useful in advanced or integrated workflows.

What Is LM Studio Designed For?

LM Studio began as a desktop application that allows users to download, manage, and run large language models (LLMs) locally. Rather than relying on cloud APIs, users can operate AI models fully offline, which appeals to developers concerned with privacy, speed, and cost control. Its core features historically included:

- Running quantized LLMs locally

- Chat-style interfaces for prompts

- API server mode for local development

- Model management and downloading tools

Originally, LM Studio focused primarily on text-based models such as LLaMA variants, Mistral, and other open-weight language models. However, as multimodal AI gained momentum, demand increased for systems capable of handling not only text but also images and vision-based inputs.
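
As a minimal sketch of the API server mode listed above, the Python snippet below builds a chat request for a local OpenAI-compatible endpoint. The address `http://localhost:1234/v1` and the model name `local-model` are assumptions here; both depend on how the local server is configured and which model is loaded.

```python
import json
import urllib.request

# Assumed address of the local server; adjust to match your own setup.
BASE_URL = "http://localhost:1234/v1"


def build_chat_request(prompt, model="local-model", temperature=0.7):
    """Build an OpenAI-style chat completion payload (model name is a placeholder)."""
    return {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "temperature": temperature,
    }


def send_chat_request(payload, base_url=BASE_URL, timeout=60):
    """POST the payload to the local server and return the reply text."""
    request = urllib.request.Request(
        f"{base_url}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=timeout) as response:
        body = json.load(response)
    return body["choices"][0]["message"]["content"]


if __name__ == "__main__":
    payload = build_chat_request("Describe a lighthouse at dusk in one sentence.")
    print(json.dumps(payload, indent=2))
    # Uncomment once the local server is running with a model loaded:
    # print(send_chat_request(payload))
```

Because the request never leaves the machine, the same code works offline once a model has been downloaded.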

Can LM Studio Generate Images?

The short answer is: yes, but with limitations and specific model requirements.

By 2026, LM Studio supports certain image-generation-capable models, particularly those built on diffusion architectures or multimodal LLM frameworks that combine text and visual outputs. However, image generation is not its primary focus. Instead, it acts as a flexible local runtime environment where compatible models can be loaded.

There are two main approaches through which LM Studio can handle image-related tasks:

- Running diffusion models: possible when the model is packaged in a supported format and fits the local hardware.

- Using multimodal models: text-and-image models can interpret, describe, or in some cases generate visual content.

That said, users expecting a simplified image-generation workflow similar to browser-based AI art tools may find LM Studio more technical. It often requires manual configuration, hardware awareness, and understanding of model formats.

Supported Image-Capable Models in 2026

LM Studio’s compatibility depends heavily on:

- Model format (GGUF, safetensors, etc.)

- Backend inference engine support

- GPU acceleration capabilities
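
Since model format is the first compatibility check, it can help to confirm what a downloaded file actually is. The sketch below inspects the file header: GGUF files start with the ASCII bytes `GGUF`, and safetensors files start with an 8-byte little-endian length followed by a JSON header. The function name `sniff_model_format` and the size cap are illustrative choices, not part of LM Studio.

```python
import json
import struct
import tempfile
from pathlib import Path

# Illustrative upper bound on the JSON header size we are willing to read.
MAX_HEADER_BYTES = 100_000_000


def sniff_model_format(path):
    """Return 'gguf', 'safetensors', or 'unknown' based on the file header."""
    with open(path, "rb") as handle:
        head = handle.read(8)
        # GGUF files begin with the ASCII magic bytes "GGUF".
        if head[:4] == b"GGUF":
            return "gguf"
        # safetensors files begin with an 8-byte little-endian header length,
        # followed by a JSON object of exactly that length.
        if len(head) == 8:
            (header_len,) = struct.unpack("<Q", head)
            if 0 < header_len <= MAX_HEADER_BYTES:
                raw = handle.read(header_len)
                if len(raw) == header_len:
                    try:
                        if isinstance(json.loads(raw), dict):
                            return "safetensors"
                    except ValueError:
                        # Not valid JSON (or not valid text), so not safetensors.
                        pass
    return "unknown"


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        # Write a tiny fake GGUF header (magic bytes plus a version number).
        demo = Path(tmp) / "demo.gguf"
        demo.write_bytes(b"GGUF" + struct.pack("<I", 3))
        print(demo.name, "->", sniff_model_format(demo))
```

A header check like this only identifies the container format; it says nothing about whether a particular backend can run the model inside it.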

By 2026, several categories of models may be used within or alongside LM Studio:

1. Diffusion-Based Image Models

Stable Diffusion variants and lightweight diffusion models can be integrated if properly configured. While LM Studio is not exclusively built for diffusion pipelines, users have increasingly run optimized versions locally using supported runtimes.

These models allow:

- Text-to-image generation

- Image-to-image transformations

- Style-based image outputs

2. Multimodal Large Language Models

Vision-language models (VLMs) such as LLaVA-style frameworks or newer multimodal LLMs allow:

- Image captioning

- Image analysis and interpretation

- Limited image-based generation tasks

These models often require more VRAM than text-only models because they load a vision encoder alongside the language model, making hardware one of the most important considerations.

3. Hybrid AI Workflows

Some users use LM Studio as part of a larger AI toolchain. For instance:

- LM Studio handles advanced prompt engineering locally.

- A dedicated image-generation engine processes the visual request.

- Results are reintegrated into a workflow or preview panel.

This modular workflow has become more common in 2026 as users combine local AI tools for maximum flexibility.
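
The three steps above can be sketched as a small pipeline. Everything here is hypothetical scaffolding: `run_hybrid_pipeline`, `fake_refiner`, and `fake_renderer` are illustrative names, and in a real setup the refiner would call a locally served language model while the renderer would call whichever image engine is installed.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class PipelineResult:
    original_prompt: str
    refined_prompt: str
    image_bytes: bytes


def run_hybrid_pipeline(
    prompt: str,
    refine_prompt: Callable[[str], str],
    render_image: Callable[[str], bytes],
) -> PipelineResult:
    """Refine a prompt with a language model, then pass it to an image engine."""
    refined = refine_prompt(prompt).strip()
    if not refined:
        # Fall back to the user's wording if the language model returns nothing.
        refined = prompt
    image = render_image(refined)
    return PipelineResult(prompt, refined, image)


# Stand-ins so the sketch runs without any model loaded (both are hypothetical).
def fake_refiner(prompt: str) -> str:
    return f"{prompt}, golden hour lighting, 35mm photo"


def fake_renderer(prompt: str) -> bytes:
    return f"<image for: {prompt}>".encode("utf-8")


if __name__ == "__main__":
    result = run_hybrid_pipeline("a lighthouse at dusk", fake_refiner, fake_renderer)
    print(result.refined_prompt)
    print(len(result.image_bytes), "bytes")
```

Passing the two stages in as functions keeps the tools independent, so either one can be swapped without touching the other.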

Hardware Requirements for Image Generation

One of the most important factors determining LM Studio’s image-generation capability is hardware.

For stable and fast performance, users typically need:

- Modern GPU: NVIDIA RTX with CUDA support, or an equivalent GPU supported by the inference backend

- 8–16GB VRAM or more: higher-resolution outputs need more memory

- 16–32GB system RAM: Recommended for larger models

- SSD storage: Fast loading of large model files

Text-based LLMs can run on modest setups, particularly when quantized. However, image generation requires significantly more computational resources because diffusion models refine the entire image over many denoising steps.
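
To make the effect of quantization on memory concrete, the rough arithmetic is parameters times bits per weight, divided by eight to get bytes. The sketch below applies it; the 20% overhead margin is an assumed placeholder for activations and caches, not a measured figure, and real usage varies by model and backend.

```python
def estimate_weight_memory_gb(num_params: float, bits_per_weight: float) -> float:
    """Approximate memory for model weights alone, in gigabytes (10^9 bytes)."""
    return num_params * bits_per_weight / 8 / 1e9


def fits_in_vram(
    num_params: float,
    bits_per_weight: float,
    vram_gb: float,
    overhead_fraction: float = 0.2,  # assumed margin, not a measured value
) -> bool:
    """Rough check: weights plus an assumed overhead margin must fit in VRAM."""
    needed = estimate_weight_memory_gb(num_params, bits_per_weight) * (1 + overhead_fraction)
    return needed <= vram_gb


if __name__ == "__main__":
    for bits in (16, 8, 4):
        size = estimate_weight_memory_gb(7e9, bits)
        verdict = "fits" if fits_in_vram(7e9, bits, vram_gb=8) else "does not fit"
        print(f"7B parameters at {bits}-bit: ~{size:.1f} GB of weights, {verdict} in 8 GB")
```

By this estimate a 7-billion-parameter model needs about 14 GB for weights at 16-bit precision and about 3.5 GB at 4-bit, which is why quantized models run on hardware that full-precision ones cannot.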

How LM Studio Compares to Dedicated Image AI Tools

It is important to distinguish LM Studio from platforms built exclusively for visual generation. Dedicated image tools often offer:

- Simplified graphical interfaces

- Built-in upscaling and editing tools

- Preset artistic styles

- Cloud-based scalability

LM Studio, in contrast, prioritizes local control and flexibility. For developers and privacy-conscious professionals, this tradeoff is often worthwhile.

Advantages of using LM Studio for image generation include:

- No cloud dependency

- No usage fees after model download

- Full local data control

- Custom model experimentation

Limitations may include a steeper learning curve and less visual polish compared to consumer-oriented platforms.

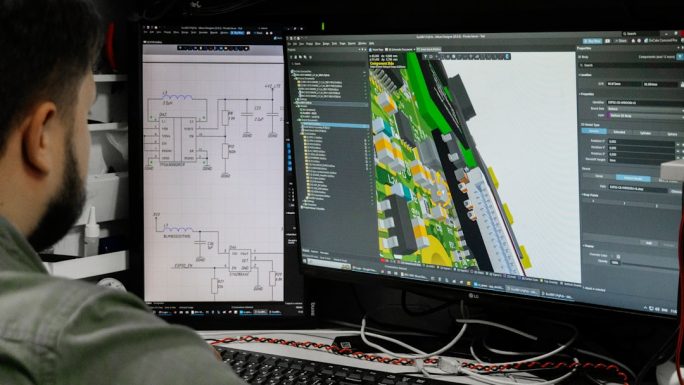

AI Image Workflow Inside LM Studio

A typical 2026 workflow for generating images within or through LM Studio might look like this:

- The user selects a compatible diffusion or multimodal model.

- A text prompt is written in the chat or developer console interface.

- The model processes the prompt using GPU acceleration.

- The output image is rendered and optionally saved or exported.

Advanced users can also modify inference parameters such as:

- Sampling steps

- Guidance scale

- Image resolution

- Seed values for reproducibility

These controls make LM Studio attractive to technically inclined users who want fine-tuned outputs.
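
Two of these parameters are easy to illustrate without a real model. The toy sketch below shows why a fixed seed gives reproducible results (the starting noise is identical) and how a guidance scale is applied in classifier-free guidance, where the output is the unconditional prediction plus the scale times the difference between the conditional and unconditional predictions. The `GenerationSettings` class and its default values are illustrative assumptions, not LM Studio settings.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class GenerationSettings:
    # Illustrative defaults only; real tools expose their own names and ranges.
    steps: int = 30
    guidance_scale: float = 7.5
    width: int = 512
    height: int = 512
    seed: int = 42


def initial_noise(settings: GenerationSettings, count: int = 4) -> list:
    """Draw the starting noise from a generator seeded with settings.seed."""
    rng = random.Random(settings.seed)
    return [rng.gauss(0.0, 1.0) for _ in range(count)]


def apply_guidance(uncond: list, cond: list, guidance_scale: float) -> list:
    """Classifier-free guidance: uncond + scale * (cond - uncond), element-wise."""
    return [u + guidance_scale * (c - u) for u, c in zip(uncond, cond)]


if __name__ == "__main__":
    a = initial_noise(GenerationSettings(seed=42))
    b = initial_noise(GenerationSettings(seed=42))
    c = initial_noise(GenerationSettings(seed=43))
    print("same seed, same noise:", a == b)
    print("different seed, same noise:", a == c)
    print(apply_guidance([0.0, 1.0], [1.0, 1.0], guidance_scale=7.5))
```

A guidance scale of 1 returns the conditional prediction unchanged; larger values push the result further toward the prompt.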

Multimodal Capabilities Beyond Generation

Image generation is not the only visual capability available in LM Studio by 2026. Multimodal systems can also:

- Interpret uploaded charts or screenshots

- Analyze photographs for descriptive summaries

- Assist developers with UI mockup reviews

- Generate structured descriptions for datasets

In professional environments, these functionalities are often more valuable than raw image synthesis. Designers, engineers, and researchers can use locally deployed models to process sensitive visual materials without transmitting files to external servers.
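
For image analysis through a local API, one common pattern is to embed the image in the request as a base64 data URL, following the OpenAI-style chat message format. The sketch below only builds that payload. Whether a given local server and model accept it should be verified against their documentation, and the model name `local-vision-model` is a placeholder.

```python
import base64
import json


def build_vision_request(image_bytes, question, model="local-vision-model", mime_type="image/png"):
    """Build an OpenAI-style chat payload that carries an image as a data URL."""
    encoded = base64.b64encode(image_bytes).decode("ascii")
    return {
        "model": model,  # placeholder; use the identifier of the loaded model
        "messages": [
            {
                "role": "user",
                "content": [
                    {"type": "text", "text": question},
                    {
                        "type": "image_url",
                        "image_url": {"url": f"data:{mime_type};base64,{encoded}"},
                    },
                ],
            }
        ],
    }


if __name__ == "__main__":
    # Stand-in bytes; in practice, read them from a real file, for example
    # pathlib.Path("chart.png").read_bytes().
    fake_image = b"\x89PNG\r\n\x1a\n"
    payload = build_vision_request(fake_image, "What does this chart show?")
    print(json.dumps(payload, indent=2)[:300])
```

Because the image travels inside the request body to a server on the same machine, the file is never uploaded to an external service.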

Are There Any Limitations in 2026?

Despite progress, LM Studio still has practical constraints:

- Not all image models are plug-and-play.

- Large vision models may exceed consumer GPU memory.

- Setup complexity can be intimidating for beginners.

Additionally, while frequent updates improve compatibility, LM Studio’s primary identity remains centered on local LLM management. Image generation is useful but secondary to its text-based capabilities.

The Future of LM Studio and Image AI

Looking beyond 2026, there is a clear trend toward fully multimodal local AI environments. A growing number of open-weight models combine:

- Text generation

- Image understanding

- Image creation

- Possibly even audio and video synthesis

As unified model architectures mature, tools like LM Studio are expected to offer deeper native support for visual generation without requiring complex configuration. Improved hardware efficiency and better quantization methods may also reduce system requirements.

For now, LM Studio represents a powerful, privacy-focused platform capable of image generation when supported by compatible models and suitable hardware. It is best suited for technically minded users who value control over convenience.

Frequently Asked Questions (FAQ)

1. Can LM Studio generate images by default?

No. LM Studio does not generate images out of the box; users must load compatible diffusion or multimodal models that support image generation.

2. Does LM Studio support Stable Diffusion?

Support depends on model packaging and backend compatibility. With proper configuration and sufficient GPU resources, Stable Diffusion variants can be run locally.

3. How much VRAM is required for image generation?

Most modern image-generation models require at least 8GB of VRAM, though 12–16GB or more is recommended for higher resolutions and faster performance.

4. Is LM Studio better than cloud-based image tools?

It depends on user priorities. LM Studio offers privacy and local control, while cloud tools provide convenience, simplicity, and scalable performance.

5. Can LM Studio analyze images as well as generate them?

Yes, when using multimodal models, it can interpret and describe images, provided the model supports vision input processing.

6. Is LM Studio beginner-friendly for image creation?

It is more suitable for advanced users or developers. Beginners may find dedicated visual AI tools easier to use initially.

7. Will LM Studio expand its image capabilities in the future?

Given the industry’s move toward multimodal AI systems, expanded and simplified image support is highly likely in future updates.
